Why Do We Need GPUs in AI

AI (Artificial Intelligence) has become indispensable to modern technology development, and AI research is flourishing all over the world!

AI research is no longer limited to large research centers: small and medium-sized enterprises are now fully capable of researching and developing AI too.

Beyond the question of human resources, the hardware that serves this research is also becoming more widely available. In particular, today’s high-end graphics cards are increasingly designed with AI workloads in mind.

So have you ever wondered why graphics cards are used more and more in AI research? If you have, let’s look into it today.

Basics of AI

AI stands for Artificial Intelligence. Basically, it is a program written by humans and combined with Machine Learning (a research field in its own right) to create a complete AI.

Normally, an AI operates on the data that the programmer loads into it. It then relies on that data to produce information, returning a result that corresponds to each different case.

That means there is no magic here: most of what current AI can do is thanks to what humans feed it. It’s that simple, guys!

What is needed to research AI?

First, I would like to stress that the core factor is still people. AI research centers need a team of highly qualified information technology (IT) staff.

In addition to highly specialized programmers, AI research also requires experts in machine learning, sociology, and even human learning; in short, all kinds of learning.

Because AI is created to do things the way humans do, factors such as society and human behavior must be taken into account, and they are an important source of data for AI.

Second, of course, there are the machines. We have all heard stories about young people who, alone with a personal computer, wrote software worth millions of dollars.

But AI is different: its complexity and data sources are enormous, so it is very difficult for an individual to do everything on their own.

Computers used for AI research are machines with very powerful configurations, and they often come with 3-4 high-end graphics cards.

Why choose GPUs (graphics cards) for AI research?

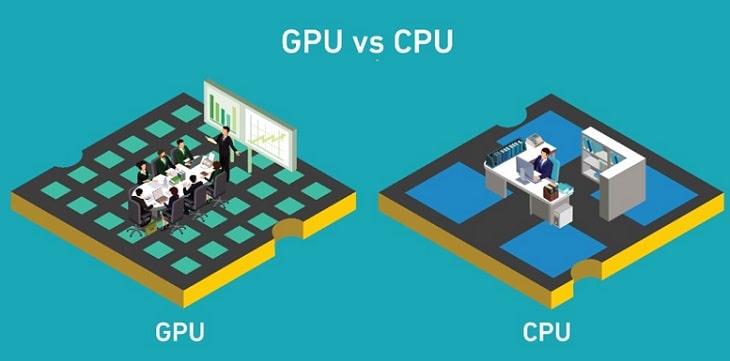

Simply because GPUs are much better than CPUs at processing large amounts of data in parallel!

As I said above, AI essentially makes a guess from its input data, so the amount of data that must be processed is “huge”, while the operation applied to that data stays largely the same.

Take an AI that distinguishes colors as an example: the input data are the characteristics of a color, specifically its optical properties.

When the hardware records the color image in front of it, the AI compares what the sensor receives with the data it already has and then gives a result. Other AI systems work in a similar way.

In this example, the huge amount of work comes from the physical characteristics captured by the camera or sensor, while the operation itself is just comparing them with the stored data and then giving a result.
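To make this more concrete, here is a minimal Python sketch of the color example. The reference colors, the function names, and the distance measure are my own assumptions for illustration, not how any particular AI system works. The point is that every pixel goes through the same small comparison, independently of the others, which is the kind of work a GPU can spread across many cores at once.

```python
# Hypothetical reference data: the "data the AI already has".
REFERENCE_COLORS = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "white": (255, 255, 255),
    "black": (0, 0, 0),
}


def squared_distance(a, b):
    """Squared Euclidean distance between two RGB triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_pixel(pixel):
    """Return the name of the reference color closest to this pixel."""
    return min(
        REFERENCE_COLORS,
        key=lambda name: squared_distance(pixel, REFERENCE_COLORS[name]),
    )


def classify_image(pixels):
    # Each pixel is classified independently of the others.
    # This loop runs them one by one; a GPU could run them side by side.
    return [classify_pixel(p) for p in pixels]


# Made-up sensor readings for illustration.
sensor_pixels = [(250, 10, 5), (12, 240, 30), (5, 5, 200), (240, 240, 240)]
print(classify_image(sensor_pixels))  # ['red', 'green', 'blue', 'white']
```

With four pixels this is instant anywhere. With millions of pixels per image and many images per second, running the same comparison in parallel is where the GPU pulls ahead.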

Next, today’s high-end graphics cards have video memory (VRAM) with very large capacity and extremely high speed. The RTX 3090, one of the top products on the market today, comes with 24 GB of GDDR6X memory, a 384-bit bus, and 936 GB/s of bandwidth.

These are very impressive figures: they help keep large datasets from becoming a bottleneck, so everything is processed as quickly as possible.
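For the curious, the bandwidth figure can be checked with a bit of arithmetic. The sketch below assumes an effective data rate of 19.5 Gbps per pin for the GDDR6X memory, a number that is not given in this article, and the function name is just for illustration.

```python
BUS_WIDTH_BITS = 384      # bus width quoted in the article
PER_PIN_RATE_GBPS = 19.5  # assumption: effective GDDR6X data rate per pin
MEMORY_GB = 24            # memory capacity quoted in the article


def bandwidth_gb_per_s(bus_width_bits, per_pin_rate_gbps):
    """Peak bandwidth = bus width (bits) * per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * per_pin_rate_gbps / 8


bandwidth = bandwidth_gb_per_s(BUS_WIDTH_BITS, PER_PIN_RATE_GBPS)

# Theoretical time to read the whole 24 GB once at peak bandwidth.
full_read_ms = MEMORY_GB / bandwidth * 1000

print(f"Peak bandwidth: {bandwidth:.0f} GB/s")          # Peak bandwidth: 936 GB/s
print(f"Reading all 24 GB once: {full_read_ms:.1f} ms")  # Reading all 24 GB once: 25.6 ms
```

These are theoretical peak values; real workloads will see lower numbers, but it gives a feel for the scale.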

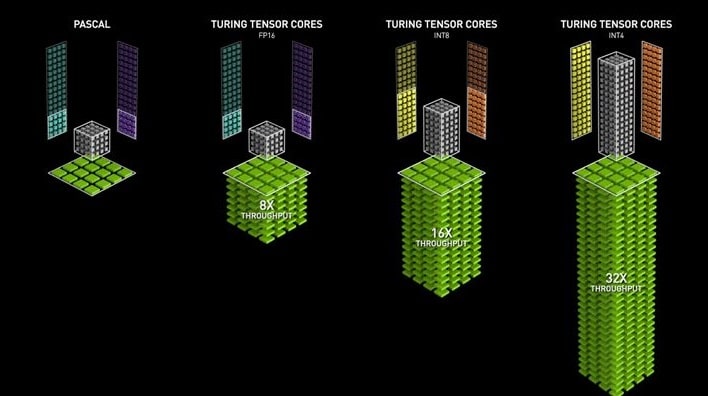

Another factor that cannot be ignored is that manufacturers now design specialized cores into their graphics cards.

A notable example is Nvidia’s Tensor Cores, which are specialized for Deep Learning (a subfield of Machine Learning).

According to Nvidia, these improvements cut training that used to take months down to just a few weeks. That saves a lot of time!
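Roughly speaking, the operation that Tensor Cores are built to speed up is a matrix multiply-accumulate, D = A × B + C, on small blocks of numbers. The plain Python sketch below only illustrates that arithmetic. The function name is my own, and it says nothing about how the hardware actually executes it or which number formats it uses.

```python
def matmul_accumulate(a, b, c):
    """Compute D = A x B + C for small matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [
            c[i][j] + sum(a[i][k] * b[k][j] for k in range(inner))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


# Tiny made-up matrices for illustration.
a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]

d = matmul_accumulate(a, b, c)
print(d)  # [[20, 23], [44, 51]]
```

Deep learning models repeat this kind of calculation an enormous number of times, which is why doing it in dedicated hardware pays off.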

So, through this article we have answered the question: why are graphics cards used for AI research more often than CPUs?

However, many experts are experimenting with new instruction structures for AI research so that it runs better on CPUs, because in their view CPUs are fundamentally more cost-effective.

Okay, I will stop the article here. Thank you for taking the time to read it all the way through, and don’t forget to visit the Blog when you have free time.

I’m Pradeep Sharma, the author and the mind behind Techjustify, where I craft insightful blogs on technology, digital tools, gaming, AI, and beyond. With years of experience in digital marketing and a passion for tech innovation, I aim to simplify complex topics for readers worldwide.

My mission is to empower individuals with practical knowledge and up-to-date insights, helping them make informed decisions in the ever-evolving digital landscape.