How to customize the robots.txt file on the Blogger platform to prevent crawling of posts, pages, or images.

The robots.txt file is a file that must reside in the root of the domain; for example, if a site has the address example.com, then the robots.txt file must have the URL example.com/robots.txt.
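As a small illustration of the rule above, the following sketch derives the robots.txt address from any page URL of a site. The function name `robots_txt_url` and the sample post URL are hypothetical, chosen only for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/2019/10/some-post.html?m=1"))
# prints https://example.com/robots.txt
```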

This is a simple text file proposed way back in 1994 that quickly became a standard for all search engines.

Through the robots.txt file, the site administrators give instructions to the crawlers that scan the pages of the domain.

You can enter instructions to make the engines ignore specific files, directories, or URLs.

The syntax of robots.txt files is standard and, if such a file does not exist on a site, the engines will scan the site in its entirety without exception.

The engines that follow the robots.txt standard are Ask, AOL, Baidu, DuckDuckGo, Google, Yahoo! and Yandex, while Bing is only partially compatible.

The robots.txt file is structured to indicate the name of the crawler and the pages that are allowed or denied crawling.

The commands are essentially three: User-agent, Allow and Disallow. The first indicates the name of the crawler, the second indicates the pages that can be crawled and the third indicates the pages that cannot be crawled.

To indicate all crawlers we use the asterisk symbol (*), while to indicate all the pages of a domain we use the slash symbol (/). Let's take a couple of examples. A robots.txt file that contains this text

User-agent: *

Allow: /

indicates that all engines are allowed to crawl the entire site. Instead, this robots.txt file

User-agent: *

Disallow: /

tells all crawlers not to crawl anything on the site. For more complete information on how to create and customize the robots.txt file, you can consult this instruction page from Google.
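The two examples can be tried out with Python's standard `urllib.robotparser` module. This is only a sketch: the helper `parse_robots`, the crawler name `AnyBot`, and the page URL are made up for the illustration, and a real search engine may interpret a file differently from this parser.

```python
from urllib.robotparser import RobotFileParser


def parse_robots(text):
    """Build a parser from robots.txt content given as a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


# Directives of one group are written on consecutive lines, because this
# parser treats a blank line as the end of a group.
allow_all = parse_robots("User-agent: *\nAllow: /\n")
block_all = parse_robots("User-agent: *\nDisallow: /\n")

page = "https://example.com/page.html"  # hypothetical page
print(allow_all.can_fetch("AnyBot", page))  # True
print(block_all.can_fetch("AnyBot", page))  # False
```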

BLOGGER’S ROBOTS.TXT FILE

All sites on the Blogger platform, whether on a free domain of the type blogname.blogspot.com or on a custom domain, automatically have the robots.txt file in the root, so a blog with the myblog.blogspot.com domain will have its robots.txt at the URL myblog.blogspot.com/robots.txt.

Blogger's robots.txt has this generic structure

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://nameblog.blogspot.com/sitemap.xml

where the last line is that of the Sitemap, which varies according to the domain of the site.

The first two lines allow Google's advertising partners to crawl the entire site. The lines from the third to the fifth allow all crawlers to crawl the entire site, with the exception of URLs beginning with /search, which include the label pages; Blogger has decided not to have these scanned to avoid redundancy with the URLs of the posts.
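To see the effect of the rules for all crawlers, here is a minimal sketch that feeds that group to Python's `urllib.robotparser`. The blog address, the label name, and the crawler name `AnyBot` are placeholders, not values taken from a real blog.

```python
from urllib.robotparser import RobotFileParser

# The group for all crawlers from Blogger's generic file.
DEFAULT_RULES = """\
User-agent: *
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())

base = "https://myblog.blogspot.com"  # placeholder blog address
for path in ("/search/label/SEO", "/search?q=robots", "/2019/10/titolo-post1.html"):
    verdict = "allowed" if parser.can_fetch("AnyBot", base + path) else "blocked"
    print(path, "->", verdict)
```

The label page and the search results page come out as blocked, while the post URL is allowed.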

However, those who have a site with special needs can customize this robots.txt file from the Dashboard.

Go to Settings -> Search Preferences -> Crawler and Indexing -> Custom Robots.txt File, then click Edit next to Disabled. Check Yes to customize the file.

ROBOTS.TXT FILE CUSTOMIZATIONS

1) Block a post or page URL to prevent crawling. Type these lines

Disallow: /2019/10/titolo-post1.html

Disallow: /p/name-page1.html

then click Save Changes.

IMPORTANT: The lines must be added to those already present in the original file. The complete text, as in the following example, must then be pasted into the text box.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Disallow: /2019/10/titolo-post1.html

Disallow: /p/name-page1.html

Sitemap: https://blogname.blogspot.com/sitemap.xml

Basically, you have to add the lines to the pre-existing ones.
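The added Disallow lines come after Allow: /, which might look like a conflict. Google documents that the most specific (longest) matching rule wins, with Allow preferred on a tie, so the longer post and page paths take precedence. The sketch below illustrates that precedence only; `is_allowed` and `RULES` are hypothetical names, and the wildcard characters * and $ are deliberately not handled.

```python
# Rules of the "User-agent: *" group after the customization.
RULES = [
    ("Disallow", "/search"),
    ("Allow", "/"),
    ("Disallow", "/2019/10/titolo-post1.html"),
    ("Disallow", "/p/name-page1.html"),
]


def is_allowed(rules, path):
    """Longest matching prefix wins; on equal length, Allow wins."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty value matches nothing
        longer = len(prefix) > best_len
        tie_for_allow = len(prefix) == best_len and directive == "Allow"
        if longer or tie_for_allow:
            best_len = len(prefix)
            allowed = directive == "Allow"
    return allowed


print(is_allowed(RULES, "/2019/10/titolo-post1.html"))  # False
print(is_allowed(RULES, "/2019/10/another-post.html"))  # True
```

A parser that stops at the first matching rule would reach Allow: / first and let the post through, so the result can depend on the crawler reading the file.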

2) Block the indexing of images. To the initial text of the file it is necessary to add these lines:

User-agent: Googlebot-Image

Disallow: /

to have a final result like the following:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Allow: /

User-agent: Googlebot-Image

Disallow: /

Sitemap: https://nomeblog.blogspot.com/sitemap.xml
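The per-crawler behaviour of this file can be checked with a short sketch based on Python's `urllib.robotparser`. Only the two groups relevant to images are included, and the image URL is invented for the example.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

User-agent: Googlebot-Image
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

image_url = "https://nomeblog.blogspot.com/images/photo.png"  # invented URL
print(parser.can_fetch("Googlebot-Image", image_url))  # False
print(parser.can_fetch("Googlebot", image_url))        # True
```

The image crawler is blocked everywhere, while other crawlers still fall under the general group.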

After a change, open the robots.txt file by pasting its URL into your browser to check that it is correct.
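The same check can be scripted. The sketch below compares the lines you meant to add with the text of the published file; `missing_directives` is a hypothetical helper, and the published text is hard-coded here so the example runs without a network connection.

```python
def missing_directives(expected_lines, published_text):
    """Return the expected lines that do not appear in the published file."""
    def normalize(line):
        # Collapse runs of spaces; paths stay case-sensitive.
        return " ".join(line.split())

    published = {normalize(line) for line in published_text.splitlines() if line.strip()}
    return [line for line in expected_lines if normalize(line) not in published]


# Stand-in for the text shown at the blog's /robots.txt address.
published_text = "User-agent: *\nDisallow: /search\nAllow: /\n"
expected = ["Disallow: /search", "Disallow: /p/name-page1.html"]
print(missing_directives(expected, published_text))
# ['Disallow: /p/name-page1.html']
```

For a live blog, the published text could be read with `urllib.request.urlopen` on the robots.txt URL and decoded before calling the helper.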

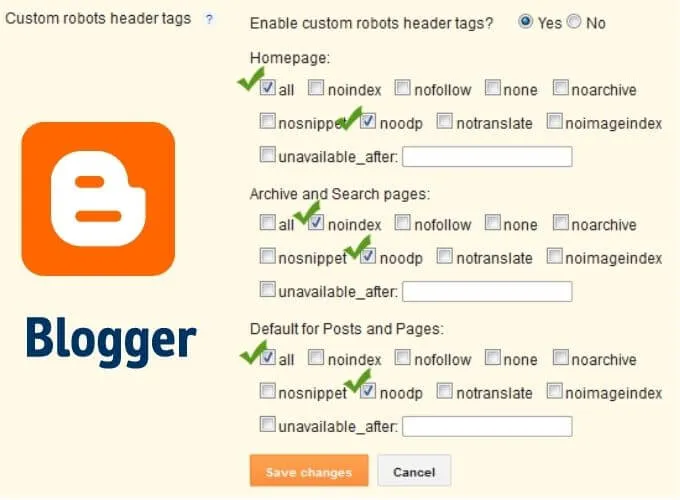

CUSTOMIZE THE ROBOT HEADER TAGS

Another system can also be used to give instructions to crawlers. Go again to Settings -> Search Preferences, but this time choose Custom Robot Header Tags -> Edit.

After checking Yes, we will see the options to act on. We can apply the robots tags separately to the Homepage, the Archive and Search pages, and the Default Settings for Posts and Pages.

Instructions for using these tags can be found on this page. Using these tags does not modify the robots.txt file; presumably, it adds lines of code to the HTML of the pages. Since their use is not very clear, I recommend using them with caution.
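One way to see what these settings actually do is to look at the source of a page after changing them. The sketch below assumes the tags show up as a standard robots meta tag in the page head, which is an assumption and not something confirmed here; the class `RobotsMetaFinder`, the function `robots_meta_directives`, and the sample HTML are all made up for the illustration.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def robots_meta_directives(html):
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.directives


# Made-up page source, standing in for a real Blogger page.
sample = "<html><head><meta content='noindex, nofollow' name='robots'/></head></html>"
print(robots_meta_directives(sample))  # ['noindex', 'nofollow']
```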
